About Me

I am a doctoral student in the Distributed RObotics and Networked Embedded Sensing (DRONES) Lab at the University at Buffalo (UB), advised by Dr. Karthik Dantu (Dept. of Computer Science and Engineering) and co-advised by Dr. John Crassidis in the Advanced Navigation and Control Systems (ANCS) Lab (Dept. of Mechanical and Aerospace Engineering). I am currently a Pathways Student at NASA Goddard Space Flight Center (GSFC) in the Science Data Processing Branch (Code 587).

My research interests include spacecraft perception and autonomy, optical navigation systems, simultaneous localization and mapping (SLAM), embedded computing, and computer vision. My current research is focused on unsupervised, generative, and representation learning-based solutions for space-vision tasks such as visual terrain detection, scene reconstruction, and landmark recognition. In the past, I have also worked on dynamic feature reasoning, 3D feature location estimation, and sim-to-real domain adaptation.

Education

Aug. 2020 - Present

University at Buffalo

Ph.D. Candidate, Computer Science and Engineering

Distributed RObotics and Networked Embedded Sensing (DRONES) Lab (Dr. Karthik Dantu)

Advanced Navigation and Control Systems (ANCS) Lab (Dr. John Crassidis)

Aug. 2020 - Feb. 2023

University at Buffalo

M.S. in Computer Science and Engineering

Aug. 2016 - May 2020

University at Buffalo

B.S. in Computer Science

Certification, Data-Intensive Computing

Experience

May 2018 - Present

NASA Goddard Space Flight Center

Student Researcher/Software Engineer, Science Data Processing

Sep. 2019 - Jan. 2020

NASA Jet Propulsion Laboratory

Software Engineer, Intern, Mobility and Robotic Systems

Jan. 2019 - Jan. 2020

NOVI Aerospace

Data Scientist, Intern

Mar. 2016 - May 2020

UB Nanosatellite Laboratory

Flight Software Lead

Featured Publications

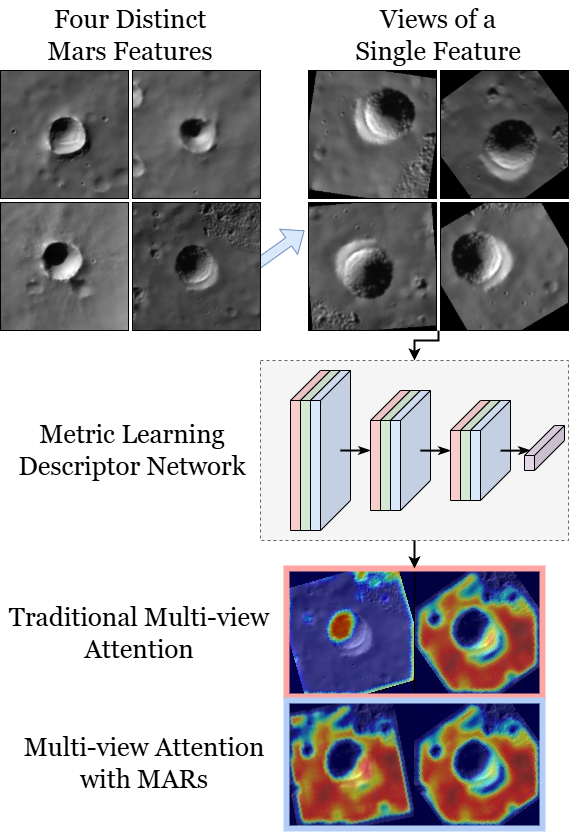

MARs: Multi-view Attention Regularizations for Patch-based Feature Recognition of Space Terrain

European Conference on Computer Vision (ECCV), 2024

The visual tracking of surface terrain is required for spacecraft to land on or navigate within close proximity to celestial objects, where current approaches rely on template matching with pre-gathered patch-based features. While recent literature has focused on in-situ detection methods, robust description is still needed. In this work, we explore metric learning as a lightweight feature description mechanism and find that current solutions fail to address inter-class similarity and multi-view observational geometry. We attribute this to the view-unaware attention mechanism and introduce Multi-view Attention Regularizations (MARs) to constrain the channel and spatial attention across multiple feature views. We analyze many modern metric learning losses with and without MARs and demonstrate improved terrain-feature recognition performance by upwards of 85%. We additionally introduce the Luna-1 dataset, consisting of Moon crater landmarks and reference navigation frames from NASA mission data, to support future research.

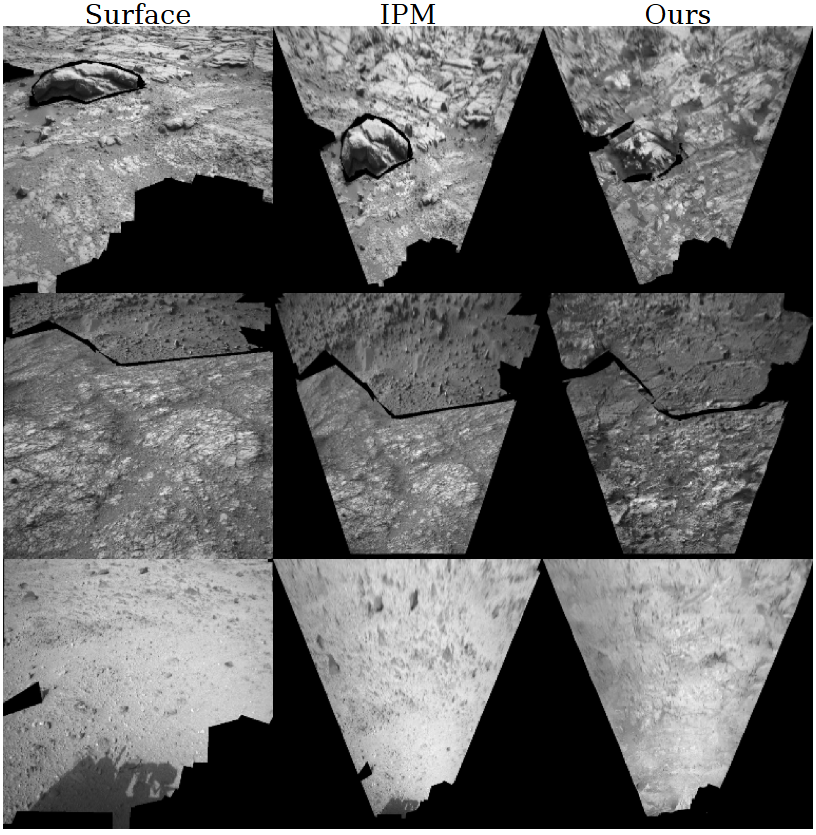

Unsupervised Surface-to-Orbit View Generation of Planetary Terrain

IEEE Aerospace Conference, 2024

We present a generative approach to orbital-style view synthesis that improves the visual fidelity of Inverse Perspective Mapping (IPM) image transformations on planetary surface terrain. We describe how to condition generative model learning on input signals given only by surface and IPM images, permitting an entirely unsupervised approach. We show consistency in both feature structure and location, allowing for the mapping of auxiliary information such as semantic pixel labels. Through in-depth qualitative and quantitative analysis, we demonstrate the ability of our method to create less-deformed, more realistic images that improve downstream learning tasks.

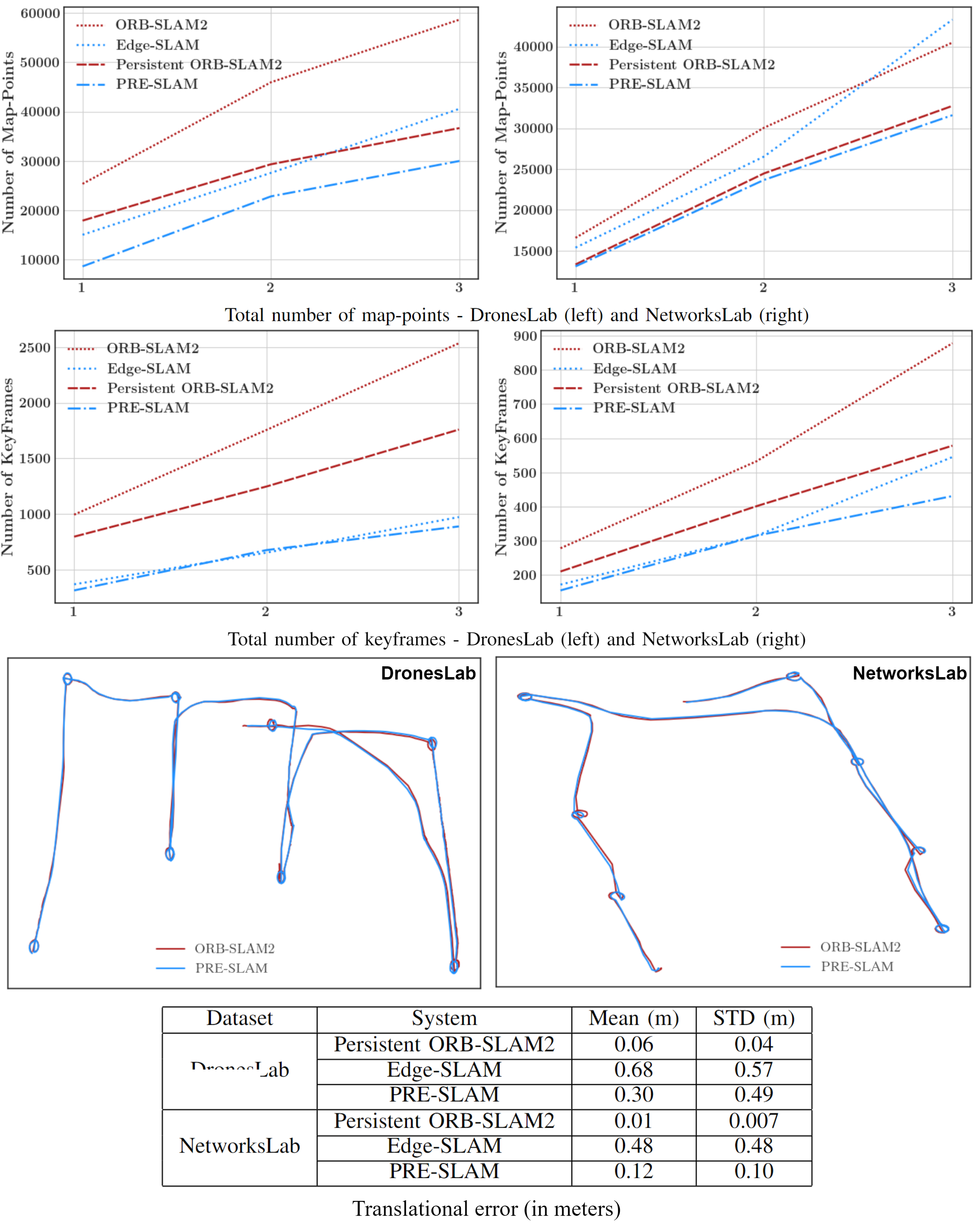

PRE-SLAM: Persistence Reasoning in Edge-assisted Visual SLAM

IEEE Conference on Mobile Ad Hoc and Smart Systems (MASS), 2022

We introduce PRE-SLAM, an edge-assisted visual SLAM system that incorporates feature persistence filtering. We revisit the centralized persistence filter architecture and make a series of modifications to allow for dynamic feature filtering in an edge-assisted setting. Using two locally collected datasets, we show how our split persistence filter implementation reduces map-point and keyframe retention by 26.6% and 16.6%, respectively. By filtering out dynamic map-points from the system, we demonstrate an improvement in average localization accuracy of more than 50%. We also demonstrate how incorporating feature persistence filtering into Edge-SLAM retains the key benefits and performance enhancements of an edge-assisted visual SLAM system, with an added communication overhead of only 500 KB while decreasing overall map size by 8.6%.

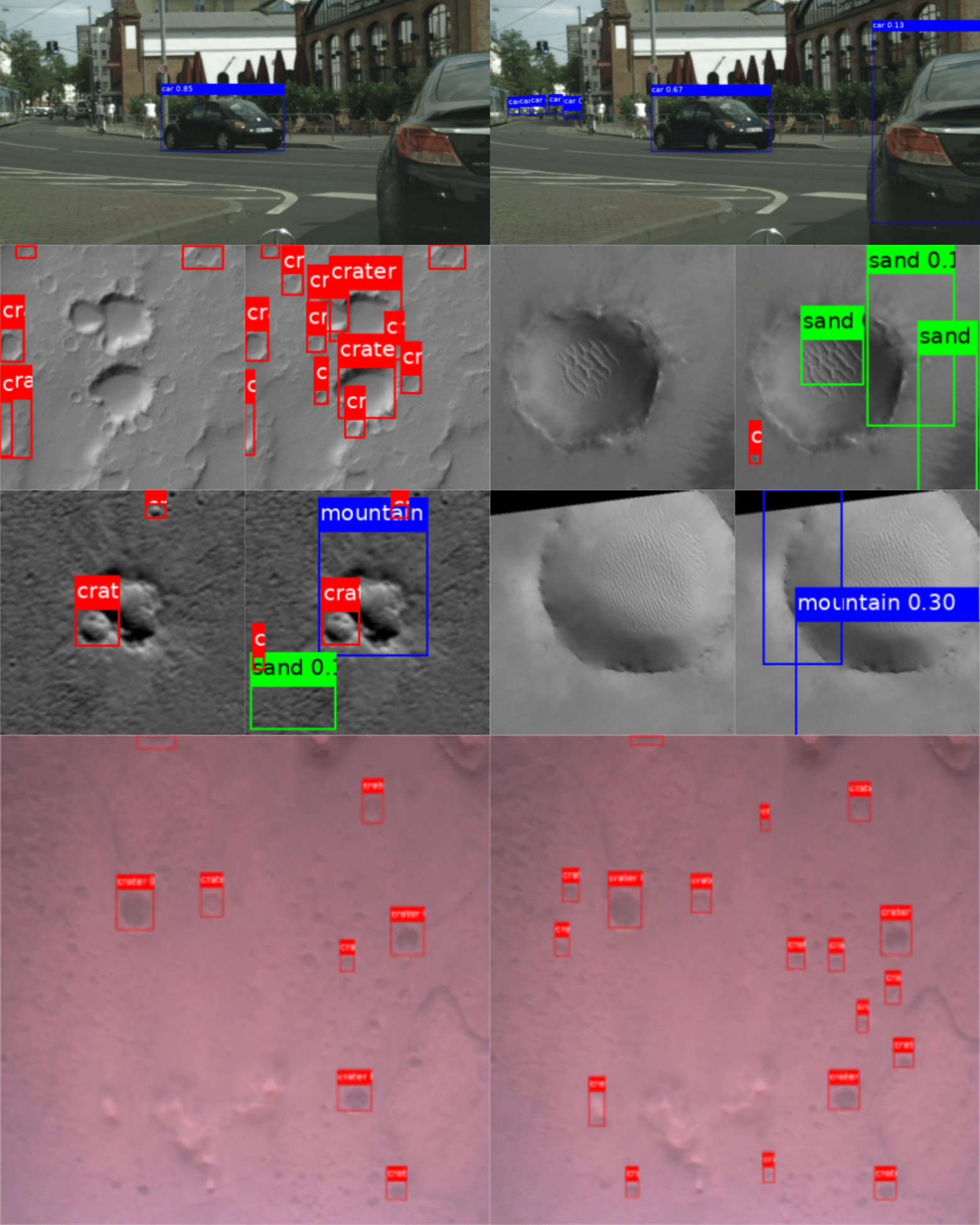

You Only Crash Once: Improved Object Detection for Real-Time, Sim-to-Real Hazardous Terrain Detection and Classification for Autonomous Planetary Landings

AAS/AIAA Astrodynamics Specialist Conference, 2022

In this work, we introduce You Only Crash Once (YOCO), a learning-based visual hazardous terrain detection and classification technique for autonomous spacecraft planetary landings. Through the use of unsupervised domain adaptation we tailor YOCO for training by simulation, removing the need for real-world annotated data and expensive mission surveying phases. We further improve the transfer of representative terrain knowledge between simulation and the real world through visual similarity clustering. We demonstrate the utility of YOCO through a series of terrestrial and extraterrestrial simulation-to-real experiments and show substantial improvements toward the ability to both detect and accurately classify instances of planetary terrain.

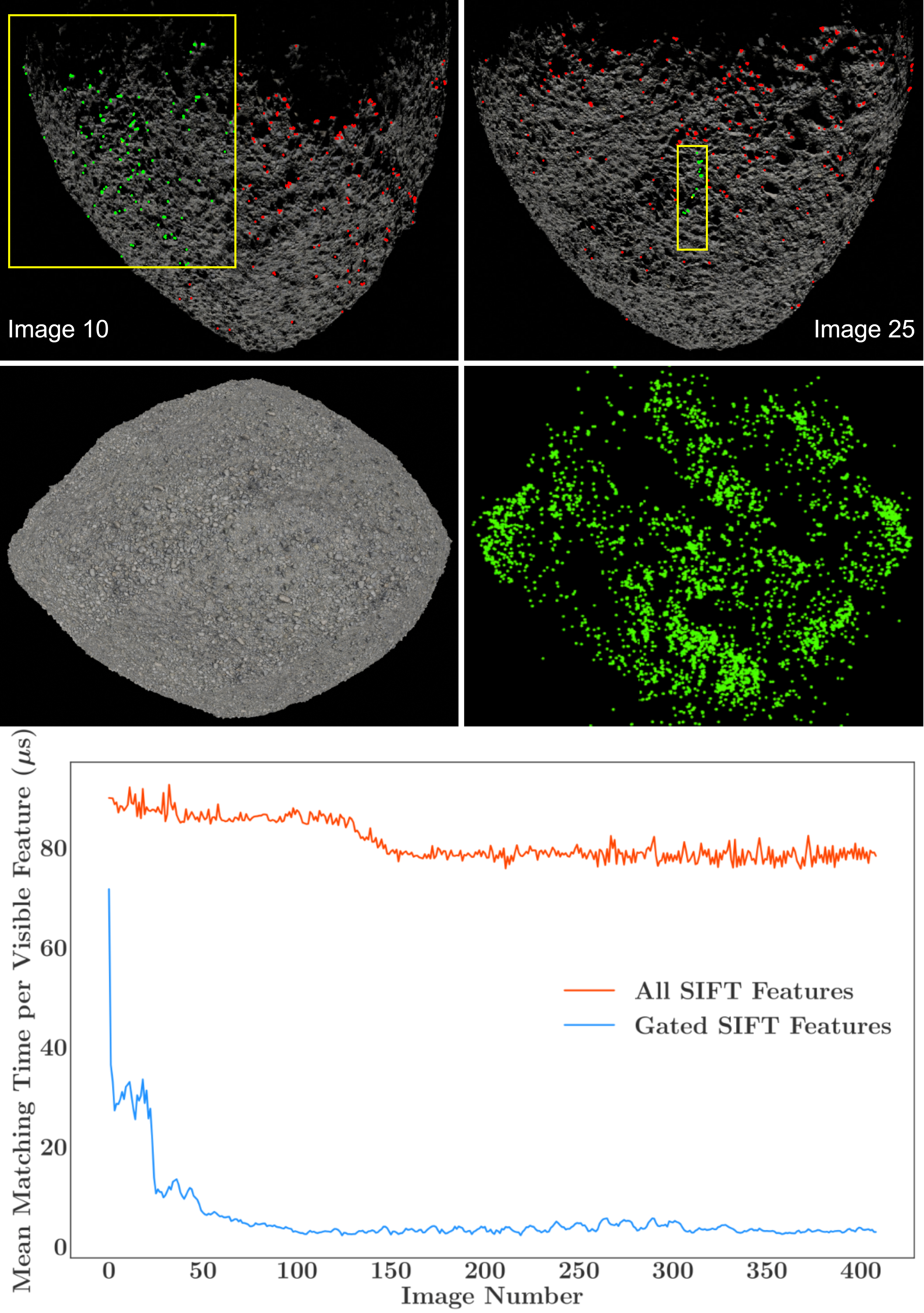

Efficient Feature Matching and Mapping for Terrain Relative Navigation Using Hypothesis Gating

AIAA SciTech Forum, 2022

This paper tackles the inaccuracies and inefficiencies of standard image feature matching processes on spaceflight processors by leveraging traditional onboard navigation filter information to drastically reduce the number of matching candidates. Estimated feature location is used to form statistical prediction gates around a given feature; all points lying inside a gate are treated as inliers and fed to the matching process. Using a simulated trajectory around a high-fidelity 3D asteroid model and a single monocular camera, we demonstrate an overall reduction of around 87% in average matching time for three popular feature description techniques. We showcase how feature gating substantially increases matching accuracy, supporting purely monocular terrain relative navigation.

News

2025

2024

- Nov. 2024: One co-authored paper accepted to the 2025 AAAI Innovative Applications of Artificial Intelligence Conference: Applications of The NASA On-Board Artificial Intelligence Research Platform.

- Oct. 2024: One co-authored paper accepted to the 2025 AAS Guidance, Navigation and Control Conference: Machine Learning based Crater Detection for Terrain Relative Navigation.

- Oct. 2024: One co-authored paper accepted to the 2025 IEEE Aerospace Conference: Evaluation and Integration of YOLO Models for Autonomous Crater Detection.

- Aug. 2024: I serve as principal investigator ($22K) of NASA research: Terrain Modeling and Landmark Navigation with Radiance Fields.

- Jul. 2024: I am a co-organizer of the Image Processing and Computer Vision session of the 2025 IEEE Aerospace Conference.

- Jul. 2024: One paper accepted to the 2024 European Conference on Computer Vision (ECCV): MARs: Multi-view Attention Regularizations for Patch-based Feature Recognition of Space Terrain.

- Jun. 2024: One co-authored paper accepted to the 2024 ESA/IAA Conference on AI in and for Space: The Onboard Artificial Intelligence Research (OnAIR) Platform.

- Jun. 2024: I serve as a summer internship mentor for four students at NASA GSFC (robotics/learning focus).

2023

- Nov. 2023: One poster presented at the 2023 Northeast Robotics Colloquium (NERC): Enhanced Visual Perception for Autonomous Spacecraft Navigation.

- Nov. 2023: Two papers accepted to the 2024 IEEE Aerospace Conference: Profiling Vision-based Deep Learning Architectures on NASA SpaceCube Platforms and Unsupervised Surface-to-Orbit View Generation of Planetary Terrain.

- Sep. 2023: I’ve received the NASA GSFC Smart Award for my nine-month mentorship and technical advisement of a Drexel University Dept. of Computer Science senior project.

- Aug. 2023: I serve as principal investigator ($27.5K) of NASA research: Towards Learning-based Visual Perception with GAVIN: the Goddard AI Verification and INtegration Tool Suite.

- Aug. 2023: One co-authored poster presented at the 2023 Small Satellite Conference (SmallSat): An Autonomous Agent Framework for Constellation Missions: A Use Case for Predicting Atmospheric CO2.

- Jun. 2023: I serve as a summer internship mentor for two students at NASA GSFC (robotics focus).

- Apr. 2023: My contributions to STP-H9 SCENIC are featured on UB Engineering’s Instagram for National Robotics Week. UB names this its post of the month.

- Mar. 2023: My work on NASA mission STP-H9 SCENIC launches to the International Space Station.

- Feb. 2023: I’ve received my M.S. in Computer Science and Engineering from the University at Buffalo.

- Feb. 2023: One co-authored paper accepted to the 2023 Small Satellite Conference (SmallSat): NASA SpaceCube Next-Generation Artificial-Intelligence Computing for STP-H9-SCENIC on ISS.

2022

- Aug. 2022: I start a NASA-led mentorship/technical advisement role for a six-student senior project group from Drexel University.

- Jun. 2022: One paper accepted to the 2022 IEEE International Conference on Mobile Ad Hoc and Smart Systems (MASS): PRE-SLAM: Persistence Reasoning in Edge-assisted Visual SLAM.

- May 2022: One paper accepted to the 2022 AAS/AIAA Astrodynamics Specialist Conference: You Only Crash Once: Improved Object Detection for Real-Time, Sim-to-Real Hazardous Terrain Detection and Classification for Autonomous Planetary Landings.

- May 2022: One co-authored poster presented at the 20th ACM International Conference on Mobile Systems, Applications, and Services (MobiSys): A Modular, Extensible Framework for Modern Visual SLAM Systems.

- Feb. 2022: One co-authored paper accepted to the 2022 AAS Guidance, Navigation and Control Conference: Attitude Determination via Earth Surface Feature Tracking Given Precise Orbit Knowledge.

2021

- Sep. 2021: One paper accepted to the 2022 AIAA SciTech Forum: Efficient Feature Matching and Mapping for Terrain Relative Navigation Using Hypothesis Gating.